GenAI Adoption in Built Environment Firms

Insights on AI adoption in built environment firms across Ireland and the UK.

Executive Summary

The construction industry stands at a critical inflection point. Although it contributes $10 trillion to the global economy, the sector has limped along at around 1% annual productivity growth, less than half the global average. GenAI offers the first credible answer to this decades-long stagnation: it can significantly improve productivity, accuracy and automation across nearly every project phase.

Introduction

Between July and September 2025, AI Institute carried out twenty-nine semi-structured interviews with senior representatives from engineering, architecture, quantity surveying and construction firms across the UK and Ireland. The goal was to capture a snapshot of where the industry stands on AI adoption: who is winning, and what does winning look like?

The interviews explored current adoption patterns, implementation challenges, perceived and measured returns, and organisational readiness factors.

The research was augmented by a PRISMA-based screening that identified 57 high-quality, peer-reviewed papers on the UK and Irish built environment or comparable professional services sectors, published between October 2020 and October 2025.

This report is the result. We thank everyone who took part and shared their valuable experience and insights. The playbook for AI has not yet been written, and by sharing your experience you are helping others.

What’s Really Happening with AI in Engineering, Architecture, and Construction

The productivity shift is measurable.

Built environment firms using AI in daily workflows are reclaiming significant time:

- Risk assessments: 157 mins → 36 mins

- 10,000-word reports: summarised by Copilot in 30 mins

- Bid preparation: 3 weeks → 3 days

These are not pilots. They are live, everyday processes that save full working weeks.

Smaller consultancies remain cautious. Large multinationals are bogged down by HQ approvals and standardised Copilot setups.

Mid-sized companies, however, are surging ahead, with dedicated AI roles, dashboards and real ROI tracking.

“We were impatient to get there quicker so we just started it.” - Architecture practice, 500 employees

Tailored systems trained on internal data outperform off-the-shelf tools, reduce data security risks, and boost reliability and trust.

Measured gains from tailored systems:

- Quality +5.2%

- Relevance +9.4%

- Reproducibility +4.8%

The competitive edge now lies in what is built in-house.

Fear and policy inertia remain the strongest brakes on adoption.

- Only 20% of firms have a formal AI policy

- Fewer than one in three invest in literacy training

Progressive firms move fast because their leaders sponsor the change. Others are held back by unclear rules and a lack of leadership.

Those who govern well gain first.

The playbook for success is being written right now by the early movers - the firms that invested early in:

- Clear governance and policy

- AI-literate teams

- Custom or narrow AI solutions

They are already realising the benefits - and widening the competitive gap.

For everyone else, the cost of waiting is rising fast.

AI has become the new measure of operational maturity.

The Playbook for AI Success

We recommend a structured three-step approach to realising GenAI’s full potential.

Three actions separate the firms getting real results from those still experimenting. This evidence-based framework shows where to start, what to scale and how to sustain momentum.

Step 1: Governance and policy from the top. Create an AI policy led by senior leadership, not by IT or Legal. Your policy must set out what you believe about AI, what is expected of employees, and what is permitted and what is not. It must be honest about job intentions (augmentation, not replacement) and should include guidance on how staff are expected to behave and learn.

“This is not an IT problem to solve. This is an organisational problem to solve.”

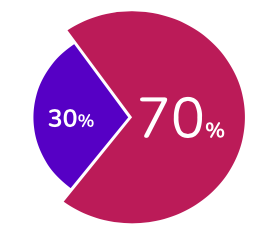

Step 2: AI-literate teams across the organisation. AI literacy is no longer optional. Without training, productivity gains evaporate and risks multiply. Only 30% of firms in our study have implemented company-wide training, leaving the other 70% exposed.

Four two-hour modules covering prompt engineering, data security, research methods and AI agents will equip your entire workforce with the core AI skills they need.

Step 3: Investment in custom or narrow AI. Move beyond ad hoc or "shadow" use of public tools. Focus your investment where it matters most: the high-value, high-risk workflows that define your competitive edge, such as cost estimation, contract review, engineering validation and compliance reporting.

Develop secure, domain-specific AI solutions built on your proprietary data. This protects confidentiality, boosts precision and delivers performance that commercial tools simply cannot match.

Why This Matters Now

The sector is at an inflection point. AI capability is fast becoming a client expectation. In engineering, construction and government contracts, firms are already being assessed on their AI maturity.

Early adopters report:

Those building secure, in-house solutions today are defining tomorrow's delivery standards.

Conclusion

The playbook for successful AI adoption is now clear:

- Governance and policy from the top.

- AI-literate teams across the organisation.

- Investment in custom or narrow AI for the workflows that matter most.

The early movers are already benefiting: saving time, winning bids and redefining productivity. The gap between them and the rest of the market is no longer theoretical. It is measurable.

AI has become the new standard of operational excellence - and the firms acting now are setting the benchmark.

Appendix 2: AI Policy Template

Answer these questions and communicate your answers across the organisation:

What do we believe about AI?

What is expected of employees?

What is permitted and what is not?

We commit to legal and ethical AI use that respects the limitations of these technologies through:

Data Privacy

We ensure that all our staff receive training and understand how AI models use data, which kinds of data are safe to share, and when personal or proprietary data must be anonymised before use.

Responsible Use

All staff are required to use only the approved AI tools. We ensure that all staff are familiar with this policy, which is continuously reviewed and updated to maintain compliance with regulations.

Our currently approved AI tools are:

[ Insert your approved set of tools ]

These tools have been evaluated for their security, privacy, and compliance with GDPR and are deemed suitable for use in our activities.

As advancements are made and new tools emerge, we will update this list to reflect the most effective and secure options.

Bias Prevention

AI reflects the data it is trained on, and we recognise the risk of perpetuating societal biases. At [ Insert your name here ] we are dedicated to producing AI-generated content that is inclusive and ethical. Wherever possible, we configure our tools to draw on diverse perspectives in their outputs, and we take active steps to identify and mitigate bias, ensuring our content is accessible and fair to all.

Transparency

At [ Insert your name here ], we are committed to transparency about our AI use, in line with the transparency obligations in Article 50 of the EU AI Act. We disclose our use of AI on our website, and this commitment to transparency is fundamental to our approach to ethical use.

Human Oversight

AI is a complementary tool that enhances, rather than replaces, human ingenuity and judgement. All our AI-generated content undergoes human review before it is used anywhere in our material. This ensures quality and alignment with our professional standards.